Creating an AI that composes melodies helped me understand how structure and randomness interact in music. My goal was to see whether a model could learn patterns that feel musical, not just mathematically correct.

Background Info

Melody generation is a sequential prediction problem, much like language modeling. Instead of predicting one word at a time, the model predicts one note at a time, using information about pitch, duration, and timing. Recurrent neural networks (RNNs) and Transformers are both common choices for AI composition because they are designed to model sequential data. The greatest challenge in this area is building models that balance repetition with variation and that create a sense of phrasing, so the piece feels intentionally composed rather than random.
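To make the language-modeling analogy concrete, here is a minimal sketch of autoregressive generation. It assumes a toy bigram count model as a stand-in for an RNN or Transformer (it is not the model described in this post), and uses a hypothetical `temperature` parameter to show how sampling trades repetition for variety: low values favor the most common continuation, while high values flatten the distribution. The corpus and all function names are illustrative.

```python
import math
import random
from collections import Counter, defaultdict


def train_bigram(sequences):
    """Count how often each note follows another (a toy stand-in for a neural sequence model)."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def sample_next(counts, prev, temperature=1.0, rng=random):
    """Sample the next note; lower temperature favors the most frequent continuation."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    options = counts[prev]
    if not options:
        return None  # no continuation was ever observed after this note
    tokens = list(options)
    # Scale log-counts by 1/temperature, then apply a numerically stable softmax.
    logits = [math.log(options[t]) / temperature for t in tokens]
    top = max(logits)
    weights = [math.exp(x - top) for x in logits]
    return rng.choices(tokens, weights=weights, k=1)[0]


def generate(counts, start, length, temperature=1.0, seed=None):
    """Generate a melody one note at a time, feeding each prediction back in."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        nxt = sample_next(counts, melody[-1], temperature, rng)
        if nxt is None:
            break
        melody.append(nxt)
    return melody


# Hypothetical corpus of MIDI pitch numbers (60 = middle C).
corpus = [[60, 62, 64, 65, 67], [60, 64, 67, 64, 60], [62, 64, 65, 64, 62]]
model = train_bigram(corpus)
print(generate(model, start=60, length=8, temperature=0.5, seed=1))
```

Every generated step follows a transition seen in the corpus, so the output stays "in style" while the sampling adds variation. A real RNN or Transformer conditions on much more than the previous note, which is what lets it learn phrase-level structure.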

I developed my melody generator as part of a larger research project exploring AI-assisted music production. My goal was not to build a system that replaces human creativity. Instead, I wanted to understand which aspects of the creative process can be emulated, and how algorithmic processes might inspire them.

Technical Insight

I trained this model on hundreds of short MIDI pieces, all in the same key and tempo. Each piece was encoded by converting its notes into tokens representing both the pitch and the time step of each note. For the architecture, I chose to experiment with a Long Short-Term Memory (LSTM) network, because LSTMs can learn patterns that unfold over time, which matters for music, while remaining relatively inexpensive to train.
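The post does not specify the exact token format, so the following is a hypothetical encoding, assuming monophonic melodies quantized to a step grid. Each note becomes one token combining its pitch and its duration in steps, and gaps between notes become explicit rest tokens so timing survives a round trip. The names `notes_to_tokens`, `tokens_to_notes`, and `build_vocab` are illustrative.

```python
from typing import Dict, List, Tuple

# Hypothetical note format: (MIDI pitch 0-127, start step, duration in steps).
Note = Tuple[int, int, int]


def notes_to_tokens(notes: List[Note]) -> List[str]:
    """Encode a monophonic melody as pitch+duration tokens, with rest tokens for gaps."""
    tokens = []
    cursor = 0  # current position on the step grid
    for pitch, start, duration in sorted(notes, key=lambda n: n[1]):
        if start > cursor:
            tokens.append(f"rest_{start - cursor}")
        tokens.append(f"p{pitch}_{duration}")
        cursor = start + duration
    return tokens


def tokens_to_notes(tokens: List[str]) -> List[Note]:
    """Decode tokens back into (pitch, start, duration) notes."""
    notes = []
    cursor = 0
    for tok in tokens:
        kind, steps_text = tok.split("_")
        steps = int(steps_text)
        if kind != "rest":
            notes.append((int(kind[1:]), cursor, steps))
        cursor += steps
    return notes


def build_vocab(sequences: List[List[str]]) -> Dict[str, int]:
    """Map every distinct token to an integer id (sorted for reproducibility)."""
    vocab = sorted({tok for seq in sequences for tok in seq})
    return {tok: i for i, tok in enumerate(vocab)}


melody = [(60, 0, 2), (62, 2, 2), (64, 6, 4)]
tokens = notes_to_tokens(melody)  # ['p60_2', 'p62_2', 'rest_2', 'p64_4']
vocab = build_vocab([tokens])
ids = [vocab[t] for t in tokens]  # integer ids are what the network actually consumes
```

Combining pitch and duration into a single token keeps sequences short, at the cost of a larger vocabulary. Overlapping notes (chords) are outside the assumption here and would not round-trip.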

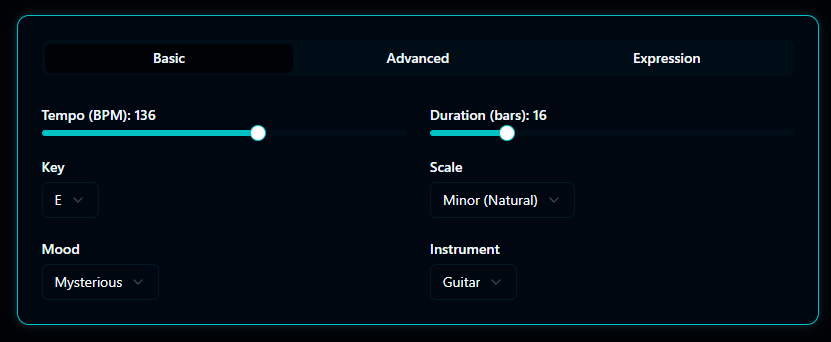

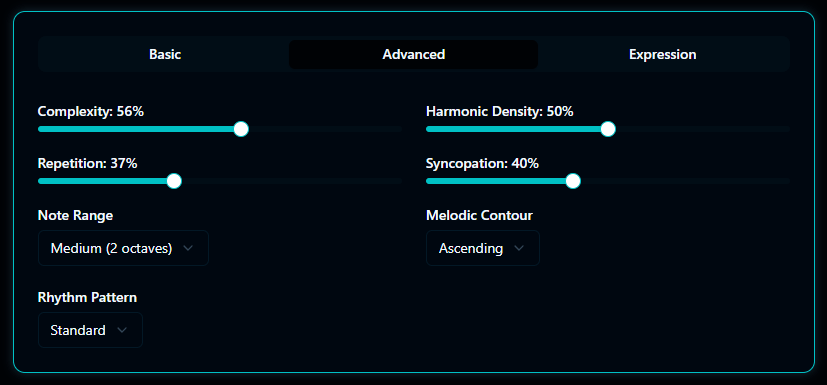

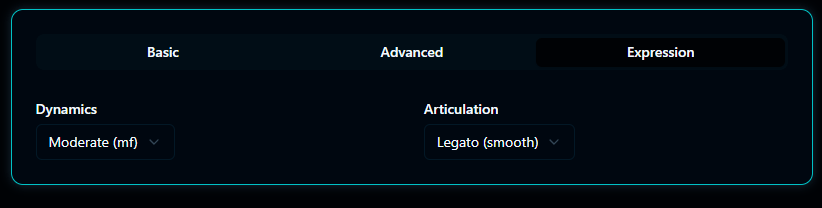

During training, the model learned by minimizing the difference between its predicted next note and the actual next note in each sequence. I also exposed a range of generation parameters so the melody could be tailored to the specific needs of the user.
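Minimizing the gap between the predicted and the actual next note is usually formalized as cross-entropy loss over next-token predictions. The sketch below computes it in plain Python, assuming the model outputs one score vector (logits) per position and that the targets are the input sequence shifted by one step. In a framework such as PyTorch, `torch.nn.CrossEntropyLoss` plays this role. The function names are hypothetical, and the post does not state which loss was actually used.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw scores into probabilities (shifted by the max for numerical stability)."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_note_loss(logits_per_step: Sequence[Sequence[float]], token_ids: Sequence[int]) -> float:
    """Average cross-entropy between predicted next-note distributions and the true next notes.

    logits_per_step[i] is the model's output after reading token_ids[: i + 1],
    so its target is token_ids[i + 1]: inputs and targets are offset by one.
    """
    targets = token_ids[1:]
    if len(logits_per_step) != len(targets):
        raise ValueError("need exactly one prediction per next-token target")
    total = 0.0
    for logits, target in zip(logits_per_step, targets):
        total -= math.log(softmax(logits)[target])
    return total / len(targets)
```

A model that spreads its probability evenly over a 4-token vocabulary scores `log(4)`, while one that puts nearly all of its probability on each correct next note scores close to 0. Training pushes the loss toward the latter.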

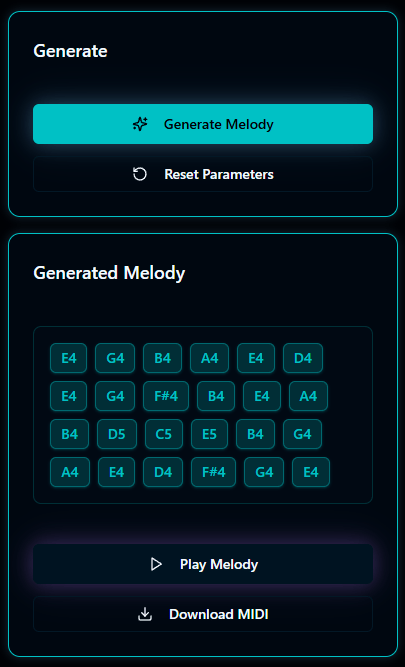

Once the model was trained, I saved the generated melodies as MIDI files and imported them into FL Studio. There I layered the synthesized melodies with drums and other synthesizers to hear how they interacted with existing human-composed sections. The results varied greatly but offered interesting insights into the model's capabilities.

While many of the generated melodies lacked an overall structure, others developed unexpected but cohesive themes that could serve as anchors for larger compositions.

Designing the melody generator showed me how musical logic can be represented as data. Each note becomes a probability, yet the results still evoke emotion. AI can mimic structure, but the creative interpretation remains human. It taught me that algorithms are best used not as composers, but as collaborators that reveal new ways to think about sound.